Gesture Enhancement of Virtual Agent Mathematics Tutors

NSF Awards: 1321042

2016 (see original presentation & discussion)

Grades K-6, Grades 6-8

People learn through interacting with the world. Yet when computers first entered educational practice, they provided limited forms of interaction, because the user-interfaces were not yet sophisticated enough. But now we have natural user-interfaces such as Wii, Kinect, and touchscreen tablets that are once again allowing educational designers to build activities in which students interact with objects. Often these are virtual objects, but they elicit and shape essentially the same perceptual and action patterns as what we find with concrete objects. What about virtual teachers? We want virtual teachers to be able to speak, gesture, and manipulate the virtual objects and gesture about these actions. Animation software now enables us to create virtual characters that move their hands in ways that look quite naturalistic. The virtual teacher needs a virtual brain, too. State-of-the-art artificial intelligence is now allowing students to receive customized feedback from software as they interact with technology. All these innovations have created an opportunity to design an interactive human-like teacher or “pedagogical agent” that intelligently and responsively supports the mathematical learning of students as they engage in interactive work with virtual objects in a computer interface. A collaborative project of UC Davis (Neff) and UC Berkeley (Abrahamson) is developing and evaluating a pedagogical agent that is inserted into an interactive learning environment, the Mathematical Imagery Trainer.

Related Content for Building a Virtual Teacher

-

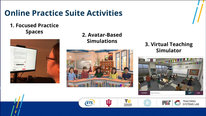

2021Simulation and Virtual Reality Tools in Teacher Education

2021Simulation and Virtual Reality Tools in Teacher Education

Jamie Mikeska

-

2019SAIL: Signing Avatars & Immersive Learning

2019SAIL: Signing Avatars & Immersive Learning

Lorna Quandt

-

2020Signing Avatars & Immersive Learning

2020Signing Avatars & Immersive Learning

Lorna Quandt

-

2020Connecting Students to Earth SySTEM through Mixed Realities

2020Connecting Students to Earth SySTEM through Mixed Realities

John Moore

-

2016Learning Computational Thinking Through Creative Movement

2016Learning Computational Thinking Through Creative Movement

Sophie Joerg

-

2020Use of 360 Video in Elementary Mathematics Teacher Education

2020Use of 360 Video in Elementary Mathematics Teacher Education

Karl Kosko

-

2018EcoMOD: Blending Computational Modeling with Virtual Worlds

2018EcoMOD: Blending Computational Modeling with Virtual Worlds

Amanda Dickes

-

2019Simulated Classrooms as Practice-Based Learning Spaces

2019Simulated Classrooms as Practice-Based Learning Spaces

Jamie Mikeska

Elissa Milto

Director of Outreach

Hi, I find this concept of an interactive virtual teacher fascinating. Do you have a working prototype of Maria? Would love to hear about the interactions she’s had with learners and changes you’ve made to your design.

Dor Abrahamson

Associate Professor

Hi Elissa,

Thanks for this question.

We are still building Maria. Currently she is in what is called the “Wizard of Oz” stage [sic!]. That means that she can register input, and she has a wide array of output, but she still needs a middle person to connect input to output. So that’s what you’re seeing in these videos — my graduate students are playing wizard, and the children are being very patient with the lags.

We hope Maria will be ready within about one year of work. Meanwhile if you’re around Berkeley and would like to meet Maria, you’re welcome to our lab.

- Dor

Arthur Camins

Director

Dor,

This is fascinating and, of course, provocative. I gather the virtual teacher is in development. However, I wonder: going into the project, what were your hypotheses about the advantages and disadvantages of the pedagogical agent relative to a human?

Thanks,

Arthur

Dor Abrahamson

Associate Professor

Dear Arthur,

NSF is funding the Maria project mainly because it will enable us to study how nuances of gesture impact conceptual comprehension. That said, the biggest advantage of a virtual teacher is the same as any online content — it is there for you — whoever, wherever, whenever — and it can only get better through data aggregation, machine learning, and design iteration.

I might add humbly that what I’m learning perhaps the most through this project is what human teachers are capable of doing. We are trying to simulate, emulate, perhaps augment. But certainly the availability factor trumps all other design motivations.

Thanks for being involved in the conversation,

- Dor

Sarah-Kay McDonald

Principal Research Scientist

Thanks very much for sharing this overview of ‘Maria’ and the ideas regarding the importance of interaction & availability that influenced her development. I’m particularly interested in learning more about how the project builds on, and is anticipated to contribute to, extant research on how gesture may affect conceptual comprehension at various stages in the learning process. Could you share a little more about the contributions the project hopes to make in this area?

Dor Abrahamson

Associate Professor

Hi Sarah-Kay,

One of the methodological challenges of gesture experiments is the inconsistency of input. That is, we have to rely on humans to perform the actions that constitute the independent variable, and yet it is difficult for humans to perform these complex multimodal coordinations. With a virtual teacher we can have the precise same gesture across all subjects. Moreover, at a flick of a button we can customize the gestures so that only a particular type of gesture is used and at a specified frequency. For example, we could set the virtual pedagogical agent to use only deictic (pointing) gesture but no mime, or vice versa.

- Dor

Sarah-Kay McDonald

Principal Research Scientist

Fascinating! I can imagine lots of interesting studies in this vein (including, potentially, research examining differences in outcomes with consistent and ‘inconsistent’ (potentially, adapted to fit the learning situation?) gesturing…) Does your team have any current plans to use Maria in this way (or thoughts on a timeframe in which others might be able to use this interactive virtual teacher for this purpose)?

Dor Abrahamson

Associate Professor

Well, Maria is funded by NSF because they want “her” to help the field improve on gesture-study methodology. Currently we are doing mostly the nuts-and-bolts work of getting “her” up and running. Once that is done, we could then put her to work doing the basic science she was “recruited” for, even as she can keep a side job as a teacher…

- dor

William Finzer

The boundary between virtual and real blurs even further! This is amazing, ambitious work, and your presentation draws attention to teacher gestures, which, I confess, I’ve hardly given any thought to! What are some examples of situations and gestures you have been able to give Maria thus far?

And what do you think of Disney’s Jungle Book virtual world? ;-)

Dor Abrahamson

Associate Professor

Hey Bill,

Thanks, and, yes, “ambitious” is the kind word, “foolhardy” better captures it. But then again, without ambition we’d never have Fathom, right?!

We know that naturalistic communication is multi-modal, and yet we haven’t had much of that capability in the past. Maria will hint at various strategies for manipulating the two cursors, such as moving them simultaneously upward (Rate A in Left hand + Rate B in Right hand) or ratcheting them sequentially upward (Left hand up A units, and only then Right hand up B units, and then iterate). She can gesture toward regions (aka “fertile grounds”) where the students might search for locations that generate the goal state (making the screen green), or she might gesture to particular features of the screen that are critical for a successful manipulation (e.g., the vertical interval between the hands). There is more here (http://edrl.berkeley.edu/content/gevamt-0), and it all started with this (http://edrl.berkeley.edu/content/kinemathics).

As for Disney, I’ll really need to wait for my kids to drag me to that. Rather INto that…

take care,

- Dor
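For readers who want to see the two strategies described above in concrete form, here is a minimal sketch. It rests on an assumption that is not spelled out in this thread: that the screen turns green whenever the right-hand height divided by the left-hand height is within a small tolerance of the target ratio B/A. The function names, the 1:2 example ratio, and the tolerance value are all hypothetical and are not taken from the project's software.

```python
def is_green(left, right, a=1, b=2, tol=0.05):
    """Assumed goal test: hand heights are in the ratio a:b (within tol)."""
    if left <= 0:
        return False
    return abs(right / left - b / a) <= tol


def simultaneous(steps, a=1, b=2):
    """Both hands rise together: left at rate a, right at rate b."""
    return [(a * t, b * t) for t in range(1, steps + 1)]


def ratchet(steps, a=1, b=2):
    """Left hand up a units, only then right hand up b units; iterate."""
    left = right = 0
    path = []
    for _ in range(steps):
        left += a
        path.append((left, right))  # position after the left hand moves
        right += b
        path.append((left, right))  # position after the right hand catches up
    return path


if __name__ == "__main__":
    for name, path in [("simultaneous", simultaneous(3)), ("ratchet", ratchet(3))]:
        print(name, [(pos, is_green(*pos)) for pos in path])
```

Under this assumed goal test, the simultaneous path satisfies the ratio at every step, while the ratcheting path satisfies it only after the right hand has caught up with each left-hand move.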

David Farina

Hello Dor,

My name is Dave Farina and I am a high school science teacher and planetarium director in Lancaster, PA. I am currently working on a grad class and was asked to visit this site and find videos that were of interest to me, and to reach out to the presenters to find out more about one of the projects. This project caught my eye as I felt like it was both scary as well as exciting. It is scary to me as it could spell the end of me as a teacher if this technology somehow was able to advance to the point of replacing the classroom teacher with a computer. It was also exciting because it reminds me a lot of the holographic projections programmed in Star Trek. At some point, there needs to be a programmer that has the necessary content knowledge to do the programming. Also, there needs to be someone for whom the models are “modeled” after. That got me thinking… I would love to speak more about this with you.

Dor Abrahamson

Associate Professor

Hey David,

Thanks for reaching out. I could chat with you in person, if you like. Next week, perhaps.

And, no we do not want to replace teachers!! I make that point very early on in this talk: https://www.youtube.com/watch?v=Hmdg-PN97PE , where I refer to neo-Luddism. In fact, what I’ve learned most from trying to build an artificial teacher is how awesome human teachers are, that is, how crazy-difficult it is to create artificial intelligence that does anything near the rich, intuitive, resourceful, and compassionate work I see teachers do. It’s about timing, those particular smiling quizzical looks, turn of phrase, cadence, customization, gentle argumentation, revoicing … The list is endless.

And so I invite you to think of Maria as nothing more (yet, heck, nothing less) than an animated interactive agent who can reach more girls and boys out there — whoever, wherever, whenever — who are learning concepts. So it’s Siri on meth with a math mission.

And yes, you’re right: our simulated teacher is only as good as what we know teachers to be and do. Sure, the computer has an inexorable memory and rapid responses, but as far as pedagogical tactics go, it’s only a mirror of what we know. And so, in fact, if you look at the publications linked here, http://edrl.berkeley.edu/content/gevamt-0 , you will see our process: First we had a previous project in which I taught kids how to interact with our Mathematical Imagery Trainer. Then Virginia acted out all my gestures wearing an action capture suit. Then her gestures were “dressed” onto Maria (using Pixar-style tech, over at UC Davis). Then Alyse spoke out all the key phrases I had used. And so you see Virginia’s body moving in Dor gestures and talking with Alyse’s voice, all orchestrated by Dr. Neff from Davis. Maria literally is all of us. Weird? Certainly. We are Maria!

best,

- Dor

Jenna Mercury

This is such an interesting idea! Our program has a lot of homeschoolers and something we always think about is the idea that they might not always have an educator/parent nearby while they are learning. As a previous classroom educator, I can certainly say that I always wished to duplicate myself! There are many components to a typical classroom and with that, many moments when additional educators should be present but are not! What is the timeline for this project?

Dor Abrahamson

Associate Professor

Thanks, Jenna. Tough call on a product-ready timeline. We’re essentially ‘basic-science’ researchers working on in-principle questions more than we are ed-tech mavericks who can churn out the polished artifact. That said, I would venture to say that 3 years from now, this, or some version thereof, should be ready off the virtual shelf.

And, yeah, I hear you about cloning yourself. One of the metaphors I use in my pre-service teacher-education courses is that of simultaneous chess. That’s when a grand-master plays up to 50 people at the same time (!). Going around the room from board to board, she hovers for a moment, figures out the situation at a glance, makes a move, and walks on to the next board, over and over.

- Dor

Arthur Camins

Director

I’d like to probe a bit more about several questions that come to mind:

1) There seems to be widespread agreement that accurately interpreting student thinking along a learning progression and using that information for feedback is essential to support their learning, but also very challenging. In building the agent how do you verify interpretation?

2) Do you have a theory of action for differentiating which features of learning are the best candidates for virtual intervention and which are best handled live, face-to-face?

Thanks,

Arthur

Dor Abrahamson

Associate Professor

Hi again, Arthur.

True, conjecturing as to what a student is thinking is indeed the Holy Grail (well, Achilles Heel… :) of this AI endeavor. The particular learning activity that is implemented in our environment is of the type ‘Mathematical Imagery Trainer’ that my lab has been developing since 2008 (see here, http://edrl.berkeley.edu/content/kinemathics , in ascending order of publications). The activity is based on a conceptualization of mathematical knowing as a sensorimotor enactment. That is, to learn a mathematical concept, kids essentially need to learn how to move in a new way. There are nuanced variations on this new way of moving, and by logging the kid’s actions we can guess their thoughts. This prediction from action to thought is based on a slow design process of model building, in which we use clinical techniques (talking to the kid) to ascertain how they are thinking. It gets to the point that the AI can guess better and better what the kid is thinking on the basis of how they are moving their hands on the screen. By way of rough analogy (well, very rough), when a vendor talks through their shop math, you can tell how they perform a calculation (e.g., “Ok, so 16 cents gives us 8 dollars, another 2 dollars is 10 and another 10 is your change from 20” tells us they subtract by adding up).

- Dor
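As a very rough illustration of "logging actions to guess thoughts," the sketch below labels a log of hand positions by how the hands moved. It is not the project's model, which the reply above describes as the product of a slow, clinically informed design process. The function name, the log format (a list of (left, right) heights), and the decision rule are all assumptions made for illustration.

```python
def classify_strategy(log, eps=1e-9):
    """Label a log of (left, right) hand heights.

    Returns "simultaneous" if more steps move both hands than move
    exactly one hand, "sequential" if the reverse holds, else "unclear".
    """
    both = one = 0
    for (l0, r0), (l1, r1) in zip(log, log[1:]):
        left_moved = abs(l1 - l0) > eps
        right_moved = abs(r1 - r0) > eps
        if left_moved and right_moved:
            both += 1
        elif left_moved or right_moved:
            one += 1
    if both == one:
        return "unclear"
    return "simultaneous" if both > one else "sequential"


if __name__ == "__main__":
    print(classify_strategy([(0, 0), (1, 2), (2, 4)]))
    print(classify_strategy([(0, 0), (1, 0), (1, 2), (2, 2), (2, 4)]))
```

A real system would need far richer features (timing, hesitation, the interval between the hands), so this only shows the general shape of mapping logged movement to a hypothesized strategy.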

Andrew Izsak

Hi Dor,

This is an interesting project. One thing hinted at but not developed in the video is connections between gesture and communicating about proportional relationships. Are there aspects of gesture that you find particularly compelling for this content, or are gestures viewed as a class of resources used across content?

Andrew

Dor Abrahamson

Associate Professor

[I should have hit Reply last time…]

Hi Andrew,

We view gestures as emerging from action, as mimed / contracted actions (‘marking’) among members of a community of practice with shared experiences (so that the gesture becomes a sign). In publications coming out of my dissertation work I documented how concept-specific gestures are lifted off mathematical representations, sometimes due to the architectural constraints of a child pointing remotely, from her seat, to a large display on the blackboard. For example, “proportion” in that project (but not in this) was a pair of hands cascading downward in scalloped coordinated arc motions, each signifying an iterated composite unit, e.g., 3 and 7, because that came from running down two parallel columns of the multiplication table.

In the current project, which began in 2008, proportion is gestured as two hands rising at different rates.

Interestingly, because we are working with natural user interfaces where mathematical reasoning is an embodied enactment, the distinction between action and gesture is blurred. So much so that when a person explains to another how they make the screen green, they literally simulate the very same bi-manual actions they had just done on the screen. Only in this discursive performance there is no feedback from the technology, and so we can distill any strategies developed.

- Dor

Elizabeth McEneaney

Very exciting work, Dor. I’m wondering how difficult it will be to program Maria to help students think flexibly about mathematical concepts, supporting divergent ways of solving problems, etc?

Dor Abrahamson

Associate Professor

Dear Elizabeth,

Thanks so much for this question. In fact, supporting diverse approaches to solving mathematical problems is a key principle of our design approach. If you look here, http://edrl.berkeley.edu/content/kinemathics , the fifth image down, you will see a figure that features different approaches to one and the same problem. We cater to any approach responsively by identifying it and offering appropriate support. Our ultimate objective is for Maria to challenge students to coordinate among different approaches as a means of fostering productive struggle toward coordinating additive and multiplicative solutions. This paper, http://edrl.berkeley.edu/sites/default/files/Ab..., explains more about that objective. And so, yes: we welcome different approaches, both intra-student and inter-student, and then strive to reconcile these different approaches logico-mathematically.

- Dor
