- Nonye Alozie

- Senior Education Researcher

- SRI International

- Svati Dhamija

- Advanced Computer Scientist

- SRI International

Automated Collaboration Assessment Using Behavioral Analytics

NSF Awards: 2016849

2021 (see original presentation & discussion)

Grades 6-8, Grades 9-12, Undergraduate, Graduate, Adult learners, Informal / multi-age

The Automated Collaboration Assessment Using Behavioral Analytics project will measure and support collaboration as students engage in STEM learning activities. Collaboration promotes clarifications of misconceptions and deeper understanding of concepts in STEM which prepares students for future employment in STEM and beyond. The 21st century skills and STEM learning frameworks (e.g., A Framework for K-12 Science Education and Common Core State Standards) include collaboration as a necessary learning skill in K-12 STEM education, yet teachers have few consistent ways to measure and support students’ development in these areas. This project will result in both an improved understanding of productive collaboration and a prototype instructional tool that can help teachers identify nonverbal behaviors and assess overall collaboration and engagement quality. Using nonverbal behaviors to assess engagement will decrease dependence on discourse and content-based dialogue and increase the transferability of this work into different domains. This project is particularly timely as the ability to collaborate and engage in group work are growing requirements in professional and learning settings; at the same time the very act of collaboration is being disrupted by the Coronavirus pandemic and there is a high likelihood that much of this “new normal” (social distancing; combining in-person and remote collaboration) will be with us for some time. This project will meet the urgent need currently felt by educators and educational institutions to support the development of collaboration skills among students, even as the very act of collaboration is shifting and non-traditional forms of education are taking hold.

Nonye Alozie

Senior Education Researcher

Good day, everyone! My name is Nonye and I am a senior education researcher in the Center for Education Research and Innovation at SRI International. Thank you for watching our video. Our NSF work is completing its first year (of a two-year project), although this work has been developing on different fronts for over 2 years. We are looking forward to conversations about how others (whether you are a researcher or practitioner) might characterize, measure, and provide feedback on collaboration. We are also interested in learning more about the different ways others use machine learning to facilitate such assessments to enhance teaching and learning. We are open to potential research and thought partnerships. Thanks everyone!

Cristo Leon

Dear Nonye,

Will it be possible to obtain a sample of the rubric for collaboration?

Nonye Alozie

Senior Education Researcher

Hi Cristo,

Thank you for asking. We are still refining, validating, and improving our rubric and will be prepared and happy to share it at a later date. We will make it available in the coming months.

PATRICK HONNER

Teacher

Hi-

Very interesting! It seems like you are attempting to use computer vision and machine learning to evaluate body language and facial expressions (is that right?). I’m curious: How do you decide which behaviors are associated with collaboration and which aren’t? And is the underlying assumption that there exist universal behaviors associated with collaboration?

I’m also wondering how this could be used by teachers. Can you talk a little more about what you think teachers could do with this information?

Nonye Alozie

Senior Education Researcher

Hi Patrick,

This is a great question and one that we get all of the time. It is a question that we try to take great care with! Yes, we are using body language and facial expressions to identify collaborative and not-so-collaborative behaviors. It is important to note that how collaboration is defined and characterized differs from person to person, right? If you ask 10 people, you will most likely get 10 different answers. Because of that, we spent a significant amount of time surveying the literature and all kinds of research on how people determine what is constructive and detrimental to collaboration. We used research in the computer-mediated collaboration space, the military (which uses collaboration in high-stress situations), and various other collaboration practices and theories, like the T7 Model of Team Effectiveness, the Team Basics Model, the Five Dynamics of Teamwork and Collaboration Model, the Conditions for Team Effectiveness Model, the Five Dysfunctions of a Team Model, Shared Plans theory, and Joint Intentions Theory, just to name a few. We spent a lot of time analyzing the research to develop a framework and pull many of these ideas together into observable practices.

I cannot say that there are universal behaviors associated with collaboration, but we have used research to inform our work, and continue to build on that work as we learn and read more. In addition, we perform validation tests on our rubric and framework using expert reviews and disconfirmation approaches for that very reason. The hope is that we can get different viewpoints (research based, experts, student surveys, etc.) about what collaborative behaviors look like. Furthermore, we are working to diversify our participant population. Students are volunteers, which instantly biases the data, but we are reaching out to participants across the country to obtain a diverse population to help provide variety. With that said, we are aware that our participants are neurotypical. There is some other work being done in our research group, not related to this project (yet! :)) that works with this population. But we are not yet incorporating that line of work into this project at the moment.

In terms of teachers using this, we really hope so! In our pilot of the work, teachers were excited about an instructional resource like this; something that could help make their already difficult jobs a little easier. The idea is that teachers would be given information on the individual student behaviors that lead to an overall collaboration quality. The teacher would also get information on the group quality. Teachers would then use that information to help students continue to strengthen their contributions to the group setting by either increasing or minimizing certain behaviors. We are working on a recommendation system that will provide the teachers with suggestions about what to highlight and what to minimize. However, we do not want to replace teachers; we want this to be something that helps them.

I hope this helps!

PATRICK HONNER

Teacher

Thanks for the detailed response. It was indeed helpful! I assumed that the underlying theory was subtle and complex, and you've given me a very nice overview of the thinking that has gone into this.

It's still not entirely clear to me how I might use this information as a classroom teacher, but it's certainly an interesting direction for research.

Nonye Alozie

Senior Education Researcher

Hi Patrick, Thank you for pushing us with this question. The goal is to make sure that teachers find this sort of thing useful. We are still developing utility cases for this so that we can provide a more realistic and implementation-based response, but in theory, this is an example of what we envision:

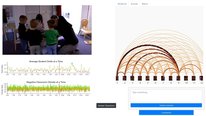

Students are working in groups during class. Let's say they are working on an online activity. While working on the activity, each student has a camera that records their interactions for the duration of the activity. While the activity is being captured, the behaviors are being processed and analyzed using machine learning models that have been trained on diverse data. The machine learning models use this data as input to determine how each student is performing on different levels. For example: What role is Student 2 playing? What role is Student 3 playing? The machine learning models also analyze the input data to determine how well the group is doing. For example, is the group working effectively together, sharing ideas equally and displaying respectful interactions while doing so?

The teacher gets that information and determines how much support to give each student and the group as a whole. For example, the teacher might see that Student 1 was off-task for most of the activity. The teacher can then talk directly to the student and provide appropriate supports for them. The recommendation system will try to help the teacher by providing suggestions of the kinds of interactions that Student 1 might want to do more of. For example, the recommendation system might say that Student 1 should perhaps spend more time following along or helping coordinate the group. The teacher may use that suggestion as part of their conversation with Student 1.

This is still down the line, but it is the direction we are heading.

I hope this helps! Please let me know if you have other thoughts.

Marion Usselman

Associate Director, and Principal Research Scientist

Good morning, Nonye. Thanks for posting your video about this very interesting work. I can see immediate practical use for researchers assessing the level of collaboration in groups. Can you describe some of the non-verbal factors that the tool is recording and analyzing? I'm curious how consistent "body language" is among students as they collaborate in group settings. How many categories of roles have you developed?

Nonye Alozie

Senior Education Researcher

Hi Marion,

Thanks for your question. Patrick (above) asked a similar question, so I will repeat some of my response here. It is important to note that how collaboration is defined and characterized differs from person to person. Because of that, we spent a significant amount of time surveying the literature and all kinds of research on how people determine what is constructive and detrimental to collaboration. Our work is informed by research in the computer-mediated collaboration space, the military, and various other collaboration practices and theories, like the T7 Model of Team Effectiveness, the Team Basics Model, the Five Dynamics of Teamwork and Collaboration Model, the Conditions for Team Effectiveness Model, the Five Dysfunctions of a Team Model, Shared Plans theory, and Joint Intentions Theory, just to name a few. We spent a lot of time analyzing the research to develop a framework and pull many of these ideas together into observable practices.

From this we created a rubric that has 3 concepts or dimensions of collaboration, broken down into 6 themes, 17 subthemes, and over 70 classes (behaviors) spread across the subthemes. An example of this is the concept called "individual awareness". That is broken down into building collaboration and detracting from collaboration. Those are further delineated into subthemes like information gathering, sharing ideas, and disengaging from the group. The subthemes are further broken down into classes like comforting others, setting up roles, and working off-task. We created so many categories to address the fact that each behavior has a function based on the context it is happening in. The categories help provide explainability for the function (not intention) of the behaviors as they relate to the overall collaboration process.
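The concept, theme, subtheme, and class levels described above can be pictured as a small tree. The following is only an illustrative sketch, not the project's rubric: the type names and the `path_for` helper are hypothetical, only the one concept named in the thread is shown, and the placement of each class under a particular subtheme is an assumption made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Subtheme:
    name: str
    classes: List[str] = field(default_factory=list)  # observable behaviors


@dataclass
class Theme:
    name: str
    subthemes: List[Subtheme] = field(default_factory=list)


@dataclass
class Concept:
    name: str
    themes: List[Theme] = field(default_factory=list)


# One concept only. Which class sits under which subtheme is ASSUMED here;
# the thread lists the names but not their exact placement.
individual_awareness = Concept(
    name="individual awareness",
    themes=[
        Theme("building collaboration", [
            Subtheme("information gathering", ["setting up roles"]),
            Subtheme("sharing ideas", ["comforting others"]),
        ]),
        Theme("detracting from collaboration", [
            Subtheme("disengaging from the group", ["working off-task"]),
        ]),
    ],
)


def path_for(concept: Concept, behavior: str) -> Optional[Tuple[str, str, str]]:
    """Return (concept, theme, subtheme) for a behavior class, or None."""
    for theme in concept.themes:
        for sub in theme.subthemes:
            if behavior in sub.classes:
                return (concept.name, theme.name, sub.name)
    return None


print(path_for(individual_awareness, "working off-task"))
```

Looking a behavior up by its path is one way to report its function in context (for example, that an observed behavior detracts from collaboration) rather than only its label.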

In terms of consistency, we perform validation and reliability tests on our rubric and framework using expert reviews, disconfirmation approaches, and Cohen's kappa calculations to achieve and maintain consistency. We are also working to diversify our participant population. Students are volunteers, which instantly biases the data, so we are reaching out to participants across the country to obtain a diverse population to help provide a variety of body language in group settings.

Hope that helps!

Kimberly Godfrey

Hello Nonye!

Thanks so much for sharing your work! I also find this interesting, as a major component of my program requires students to work collaboratively in most of our activities. It is so important for students to learn these skills, and I too am interested in some of the cues you take note of when analyzing collaborative behavior.

Nonye Alozie

Senior Education Researcher

Hi Kimberly,

I see that you are an informal educator. I want to mention that so far, this work is being conducted in a lab setting. So, there is very little noise, in terms of external distractions. Students are working in small groups after school or after class. It would be quite interesting to implement this in an informal setting.

In terms of the setting that we are working in, we created a rubric that has 3 concepts or dimensions of collaboration, broken down into 6 themes, 17 subthemes, and over 70 classes (behaviors) spread across the subthemes. An example of this is the concept called "individual awareness". That is broken down into building collaboration and detracting from collaboration. Those are further delineated into subthemes like information gathering, sharing ideas, and disengaging from the group. The subthemes are further broken down into classes like comforting others, setting up roles, and working off-task; these are the behaviors we identify from the video capture. We created so many categories to address the fact that each behavior has a function based on the context it is happening in. The categories help provide explainability for the function (not intention) of the behaviors as they relate to the overall collaboration process.

Hope that helps!

Kevin McElhaney

Hi Nonye and Svati! Excellent video, well done. How discipline-specific do you think your analytical models are (concerning the topic students are collaborating on)? Do you think they extend across science disciplines? Across STEM? Beyond STEM?

Nonye Alozie

Senior Education Researcher

Hi Kevin! Thanks. Congrats on the submission of your video as well. It looks great.

That would be an interesting study: to determine how well our collaboration framework and machine learning models transfer across disciplines. Our hope is that the use of behaviors as the driving factor, as opposed to speech, will make our collaboration model and machine learning models transferable to other disciplines within science, and hopefully across STEM. Because our literature research and analysis of collaboration as a practice was not specific to science, it is possible that it could be transferred to other disciplines outside of STEM, but we don't have data for that. In addition, the tasks that the participants engage in are in life science and physical science, so many of the practices might be science specific, even though the codes used to identify the behaviors seem generic. One of the lines of study on the project is to take a look at the relationships between the collaboration activities and the collaboration behaviors. We want to explore what kinds of behaviors might be associated with the different task characteristics. For example, do tasks that require the building of models elicit different kinds of behaviors and interactions? Does the quality of collaboration change? If so, how? Those kinds of questions would be interesting for other disciplines like math, or even social studies.

Hope that helps!

Satabdi Basu

Hi Nonye,

Such an amazing project and video! I'm curious how the behaviors and expressions associated with collaboration compare between in-person classroom settings and virtual settings, and between K-12 students and adult learners. Would love to hear any thoughts and insights you might have!

Nonye Alozie

Senior Education Researcher

Hi Satabdi. Congrats on your video submission. Nice work to you, too!

This is such a good question. We have spent all of this year working on this transition. We know that working online generates similar behaviors, but there are also many changes that occur. We learned that, for example, exhibiting attentiveness in person will look different from when working online. In a face to face setting, an attentive participant will most likely look at their peers directly. In an online setting, everyone is looking at the screen, so it becomes harder to tell. We had to pay more attention to minute changes in behavior, such as head positioning and eye gaze. In an online setting, you can usually tell if someone is looking at their cell phone vs paying attention to the task being completed based on where their eyes are looking and the way their head is tilted.

In terms of age, we didn't find many differences in age-based interactions, surprisingly. There is an article that said that after the age of 15, collaboration can be recorded successfully. However, because the middle school students that we started with were all volunteers, we had biased data and their behaviors tended to conform in a particular direction. We are still exploring this question as we collect more data.

Hope this helps!

Brian Gane

Fascinating and cutting-edge work Nonye and Svati!

I'm interested in the model accuracy at the level of individual and group assignments. One of the screenshots appeared to show classification at the level of individual's behaviors and then also at the level of group dynamics/roles. How interdependent are these levels in your model (and does measurement error propagate)? Are you able to more accurately or reliably classify at one level than the other?

Nonye Alozie

Senior Education Researcher

Hi Brian!

My colleague Anirudh is better equipped to provide this information in the next day or two. But in short, we are looking at accuracy in 2 ways: from the collaboration conceptual model perspective and from the machine learning perspective. We are conducting validation studies on our model and rubric. So far, we have been able to reach reliability agreement above 90%. Our Kappa scores for reliability have been hovering around 60%- if I remember correctly. Our validity emerges from the results of the reliability scores (using a disconfirmation approach thus far), leading to refinements to the conceptual model and rubric. In terms of the machine learning models, our F1 score performance is between 80-85%. So, we believe that we are achieving good accuracy in both perspectives.
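Percent agreement and Cohen's kappa can differ this much because kappa subtracts the agreement two coders would reach by chance. Here is a minimal sketch of both calculations; the two coders' labels are invented for illustration and are not project data, and the function names are hypothetical.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Fraction of segments on which the two coders gave the same label."""
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e), with p_e the chance agreement."""
    n = len(coder_a)
    p_o = percent_agreement(coder_a, coder_b)
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement from each coder's own label frequencies.
    p_e = sum(counts_a[k] * counts_b[k] for k in set(coder_a) | set(coder_b)) / (n * n)
    if p_e == 1.0:  # both coders used a single identical label throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Invented example: 20 video segments, most of them "on-task".
coder_a = ["on-task"] * 16 + ["off-task"] * 2 + ["on-task", "off-task"]
coder_b = ["on-task"] * 16 + ["off-task"] * 2 + ["off-task", "on-task"]

print(round(percent_agreement(coder_a, coder_b), 2))  # 0.9
print(round(cohens_kappa(coder_a, coder_b), 2))       # 0.61
```

In this made-up case the coders agree on 18 of 20 segments, but because one label dominates, much of that agreement is expected by chance, so kappa lands near 0.6.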

Yes, we code at individual and group levels. We code them independently to avoid bias in the coding and to avoid propagating error. The levels are designed to be interdependent (one level will build to the next) but they are coded independently.

Yes, we have found that levels that require more nuance have lower accuracy than levels that look more specifically at gestures and movements.

Hope that helps!

Anirudh Som

Hi Brian!

Thank you for finding our work fascinating! As Nonye mentioned, currently we observe the F1 score performance to be around 80-85% for both individual roles and behaviors. Details about the data curation, data processing and machine learning modeling steps can be found in the following paper: https://arxiv.org/pdf/2007.06667.pdf. This paper was published last year and describes our initial attempt at using machine learning to automatically assess group collaboration quality from individual roles and behavior annotations. For the feature representation described in our paper, we did find that it was slightly easier to estimate collaboration quality from individual roles than from individual behaviors.
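For readers less familiar with the metric, here is a minimal sketch of how an F1 score is computed for a multi-class labeling task. The thread does not say which averaging the project reports, so the unweighted (macro) average below is an assumption; the role labels and predictions are invented, and the function names are hypothetical.

```python
def f1_per_class(y_true, y_pred):
    """Per-class F1 = 2*TP / (2*TP + FP + FN), computed one label at a time."""
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores (averaging choice assumed)."""
    scores = f1_per_class(y_true, y_pred)
    return sum(scores.values()) / len(scores)


# Invented annotations (y_true) and model outputs (y_pred) for 8 segments.
y_true = ["coordinating", "coordinating", "following", "following",
          "following", "off-task", "off-task", "off-task"]
y_pred = ["coordinating", "following", "following", "following",
          "following", "off-task", "off-task", "coordinating"]

for label, score in f1_per_class(y_true, y_pred).items():
    print(label, round(score, 3))
print("macro F1:", round(macro_f1(y_true, y_pred), 3))
```

Reporting the per-class scores alongside the average makes it easier to see which roles or behaviors a model confuses most often.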

Since then we have developed more sophisticated machine learning models that offer more explainability and insight. Our most recent work, accepted in the EDM 2021 conference, will soon be made available in the open-access proceedings: https://educationaldatamining.org/edm2021/accep...

Brian Gane

Very helpful, thanks for the detailed explanations Nonye and Anirudh, and linking the paper! Sounds like very positive results in terms of accuracy and reliability so far. Nice to see such a strong emphasis on a conceptual model to drive the rubrics and validation work!

Nonye - can you explain more about the disconfirmation approach you use?

Nonye Alozie

Senior Education Researcher

Hi Brian. In short, it looks for cases where the researchers might disagree on whether something fits with the larger idea of the study. We look for disconfirming cases. Here is a paper that describes it:

Booth, A., Carroll, C., Ilott, I., Low, L. L., & Cooper, K. (2013). Desperately seeking dissonance: identifying the disconfirming case in qualitative evidence synthesis. Qualitative Health Research, 23(1), 126-141.

Kemi Ladeji-Osias

This is very interesting work, Nonye and Svati! I have a couple of questions:

Nonye Alozie

Senior Education Researcher

Hi Kemi,

Nice to see you here!

As of now, we have been looking at science activities. When we started the work, students worked on model building projects face to face, so our coding rubric was designed to capture hands-on activities and behaviors. With the transition to online settings (due to COVID and other educational changes), we modified our rubric to capture online interactions, where the participants solve problems virtually. We tested various platforms and performed some case studies before completely transitioning.

Yes, the rubric uses both verbal cues and behavioral cues. In fact, in the first year of our study, we tested whether the use of behavior alone was at least as good as using both verbal and behavioral interactions. We found that, of course, using both verbal and nonverbal interactions was more accurate than nonverbal alone, but our reliability between the two modalities was in the moderate Kappa range, and Kappa is a much stricter reliability calculation. This showed us that the rubric was working. Moving forward, we are experimenting with the use of verbal interactions as part of the machine learning training.

Khyati Sanjana

Senior Manager

Nonye and Svati such interesting work on group collaboration.

I am curious to know how you plan on diversifying your groups. Does your model consider the variety of non-verbal behaviors based on cultural, socioeconomic, or contextual influences?

Nonye Alozie

Senior Education Researcher

Hi Khyati

This is a wonderful question. We keep this as part of our work constantly. We do a few things to diversify our groups. Students are volunteers, which instantly biases the data, but we are reaching out to participants across the country to obtain a diverse population to help provide variety. We reach out to groups that have diverse student populations and our network system that works with various groups of people. We have used research to inform our work, and continue to build on that work as we learn and read more. Our coders are also trained with the rubric to ensure that bias is recognized and addressed. In addition, we perform validation tests on our rubric and framework using expert reviews and disconfirmation approaches for that very reason. The hope is that we can get different viewpoints (research based, experts, student surveys, etc) about what collaborative behaviors look like.

With that said- we are aware that our participants are neurotypical. There is some other work being done in our research group, not related to this project (yet! :)) that works with this population. But, we are not yet incorporating that line of work into this project at the moment.

I hope that helps!

Marcia Linn

Hi Nonye, This is fascinating work. I am wondering how the varied indicators interact with issues of status? Are the nonverbal dimensions helpful in determining when status factors interfere with collaboration? or facilitate it?

Thank you, Marcia

Nonye Alozie

Senior Education Researcher

Hi Marcia. Thanks for your question. Can you explain what you mean by status? Are you referring to SES? Or group dynamics related to hierarchy? Or something else?

Thank you!

Lindsay Palmer

Excellent work and what a great approach to supporting teachers. I'd be interested in your machine learning approach and what sorts of nonverbal behaviors you have identified? Thank you for your video!

Nonye Alozie

Senior Education Researcher

Hi Lindsay-

Can you say a little more about what you are interested in concerning the machine learning approach? For example, are you interested in how the machine learning models are trained? How the data is analyzed? Something else? I will also bring in Anirudh, one of the new co-PI's, to help answer this question.

In terms of the nonverbal behaviors we use, we created a rubric that has 3 concepts or dimensions of collaboration, broken down into 6 themes, 17 subthemes, and over 70 classes (behaviors) spread across the subthemes. An example of this is the concept called "individual awareness". "Individual awareness" is broken down into building collaboration and detracting from collaboration. Those are further delineated into subthemes like information gathering, sharing ideas, and disengaging from the group. The subthemes are further broken down into classes like comforting others, setting up roles, and working off-task. We created these categories to address the fact that each behavior has a function based on the context it is happening in. The categories help provide explainability for the function (not intention) of the behaviors as they relate to the overall collaboration process. In the upcoming months, we will be sharing our rubric, which will hopefully provide more details and useful information!

I hope that helps.

Nonye Alozie

Senior Education Researcher

I would also like to introduce Anirudh Som. He is one of the new co-PIs of the project. He will be answering questions about the machine learning as they come in. Thanks everyone!

Laura Kassner

Excited for your work and proud to be your colleague, Nonye!

Nonye Alozie

Senior Education Researcher

Thank you, Laura!

Suzanne Ruder

Thank you for sharing your work! It is great to see that skills like teamwork and communication are being stressed at the K12 level.

The ELIPSS project has developed rubrics to be used in STEM classes across disciplines. (www.elipss.com). Most of our users have been at the college level, and the biggest roadblock is in getting feedback to the students in a timely fashion, particularly in very large classes. Check out our STEM for ALL video.

Nonye Alozie

Senior Education Researcher

Hi Suzanne. Thank you for sharing! I got a chance to browse through your website and watch your video. Very inspiring- such great work! I am looking forward to sitting with all of the different rubrics that you have developed.

We are hoping that our work will lead to a feedback and recommendation system to help with that.

Eric Hamilton

Nonye, great work and very interesting - not surprising. We have a lot of respect for the learning technology research at SRI. I know you are still working on refining your rubric, but wonder if you are interested in or willing to have it used elsewhere. Our project, the International Community for Collaborative Content Creation, is all about understanding the growth of intercultural competencies and academic learning by way of collaboration. The research actually originated in collaborations that began over a decade ago with SRI. We would be very interested in seeing if your work is something we could apply. If you have a chance, please let me know, and of course our video might give additional context. Thank you!

Ning Wang

Totally agree! The social part of curriculum design is very important and should be improved further. Thanks for sharing!

Nonye Alozie

Senior Education Researcher

Thanks for engaging with our video!

Donna Stokes

Great work! I look forward to the availability of your rubric.

Nonye Alozie

Senior Education Researcher

Thanks Donna. We are looking forward to sharing what we learn.

Paola Sztajn

This is very interesting and can be helpful to STEM teachers in all domains and levels. Thanks for sharing.

Nonye Alozie

Senior Education Researcher

Thanks for watching our video!

Khyati Sanjana

Senior Manager

Your work relates so well to the POGIL Project which focuses on collaboration using their framework.

Nonye Alozie

Senior Education Researcher

Thanks! We will have to check that out!

Judi Fusco

Great video, Nonye! I look forward to the rubric, too! (Along with many others, it sounds like!) Thanks for this work.

Nonye Alozie

Senior Education Researcher

Thanks Judi! We hope that the rubric will contribute to the field.

Samuel Severance

Hi Nonye and team! This is fascinating work and has great potential for supporting collaborative learning. I'd be very curious to see how it applies to the collaboration we are seeing in our Learning with Purpose research. A few questions.

1) To further decrease the load on teachers and increase student agency, I'm wondering if you've talked about how students might get access to the "dashboard" analytics themselves so that they can better collectively (self)regulate their own collaboration?

2) Given how collaboration is mediated by tools, I'm wondering if/how you're incorporating streams of data on the artifacts that students are constructing (e.g., a slide presentation) or the tools they are using (e.g., objects students could interact with on the desk seen in the video at 1:46) into your model.

Nonye Alozie

Senior Education Researcher

Hi Sam,

1) Yes. our long term goal is to be able to provide the students with their own feedback individually and as a group. The goal is to give the teachers AND students feedback so that students can monitor themselves.

2) Are you asking if we are also analyzing the quality of the products that emerge from the collaboration? Yes, one line of our work looks at the relationship between artifact quality and collaboration quality. We spent a lot of time designing the collaboration tasks so that we could analyze how different task features and the resulting artifacts may relate to different collaboration behaviors and/or interactions.

We also have codes aimed specifically at capturing the use of tools as students collaborate. So, we incorporate how they interact with the collaboration tools: for example, are they contributing to the group effort, or are they just fidgeting with the tools? What the video doesn't show is that the students are working on open-ended questions that require them to build models. They are using paper manipulatives to create things like molecular models; these serve as the tools they are using.

I hope that helps!

James Callahan

Dr. Alozie,

Thank you for the marvelous video on this fascinating subject. You are doing quite interesting and powerful work indeed.

I'm among those who will be checking back, interested in what motions and actions the automated systems look for as indications of collaboration, or lack thereof. I know well many common indicators, but remain one of your students in terms of the breadth and depth of wisdom on this subject. So intriguing what you present.

What you have developed is certainly of interest in our program. Fostering collaboration and social problem solving is absolutely one of our goals. It's part of the feedback we look for when beta testing new labs, and continuing to improve and refine old ones.

The Mobile Climate Science Labs are invited to a wide spectrum of venues, so we are constantly adapting and customizing experiences. Happily. We take part in several of the largest STEM events in the United States (specific science festivals), field trip hubs, and youth summits, to name a few -- in addition to inside schools themselves.

We pay a great deal of attention to the student/participant experiences. Creating a highly engaging environment. Being sure that the experiences bring forward the enthusiastic involvement of young women and students of color. (Not events that are White male dominated; to the contrary, very collaborative and usually quite integrated.) Much takes place on the spot -- taking cues from people's facial reactions, body language and social interactions. As well as questions and comments of course. Studying multi-camera video footage after the events is invaluable, in addition.

However, as in the classroom, there is just too much going on all at once for us to keep up with the majority of the interactions. We typically have multiple labs and demos running at the same time. Hundreds, and often thousands, of students take part a day. So, it's very interesting indeed to learn from what the automated systems are able to do.

Question: Has your program studied collaboration among students in events hosted by various organizations in informal settings?

When it comes to fostering outstanding collaboration, there are some organizations that are amazing and outstanding. For instance, events hosted by the Girl Scouts tend to be highly collaborative. In San Francisco, the Girl Scouts hold events that provide hands-on STEM for thousands of students, and hundreds of teams of Girl Scouts.

Have you found that there is much to be learned from how different informal education programs provide presentations on STEM? When highly collaborative environments are created, how was this done successfully?

Amazing work you are doing. Thank you!

James Callahan

A second question if I may:

Have the automated systems studied STEM learning in multi-generational settings?

We frequently take part in STEM education settings that are geared to families. There are incredible dynamics there. How different members of the family take part. Multi-generational events are powerful opportunities to reach adults, as well as young people. (A big measure for us is how parents will often stand back at first with arms crossed. Yet, when they see how engaged their kids or grand-kids are in the science labs, the adults are compelled to join in the fun. The arms quickly unfold and it becomes groups of multi-ethnic families all working together.)

Very much interested in what you have learned and developed with regard to multi-generational learning environments.

Thank you!

Valerie Fitton-Kane

Hi Nonye, This is fabulous! For 35 years, Challenger Center has designed programs that focus on in-person collaboration within K-12 student groups. We always assess collaboration in our programs and have, over the years, evolved the tools we use to do that. But, we'd be interested in learning more about your tools. It sounds like it was developed for teachers, but do you anticipate program evaluators using this tool, as well?

Oludare Owolabi

Thank you so much, this work is similar to the UPSCALE pedagogy that was developed for Physics.
