- Christina Krist

- Assistant Professor

- University of Illinois at Urbana-Champaign

- Nigel Bosch

- https://pnigel.com

- Assistant Professor

- University of Illinois at Urbana-Champaign

- Cynthia D'Angelo

- https://cynthiadangelo.com

- Assistant Professor

- University of Illinois at Urbana-Champaign

- Erika David Parr

- Postdoctoral Researcher

- Middle Tennessee State University

- Elizabeth Dyer

- https://www.mtsu.edu/faculty/elizabeth-dyer

- Assistant Director, TN STEM Education Center

- Middle Tennessee State University

- Nessrine Machaka

- https://www.linkedin.com/in/nessrine-machaka/

- Research Assistant

- University of Illinois at Urbana-Champaign

- Joshua Rosenberg

- https://joshuamrosenberg.com

- Assistant Professor, STEM Education

- University of Tennessee Knoxville

Advancing Computational Grounded Theory for Audiovisual Data from STEM Classr...

NSF Awards: 1920796

2021 (see original presentation & discussion)

Grades 9-12

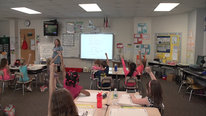

This methodological project aims to develop computational tools that assist qualitative researchers in analyzing video and audio data from K-12 STEM classrooms. In this video we present some early uses of these tools to analyze positioning in moments of joint exploration in a high school math classroom.

Mathematics, Emerging Technologies, Research / Evaluation

UI Urbana-Champ, Middle Tenn State Univ, Univ of TN Knoxville

EHR Core Research (ECR)

Related Content for Theory-based Computational Analysis of Classroom Video Data

- 2020 Theory-based Computational Analysis of Classroom Video (Christina Krist)
- 2019 Using Machine Learning to Put Teachers in the DRIVER-SEAT (Hilary Kreisberg)
- 2017 Predicting Student Collaboration from Speech (Cynthia D'Angelo)
- 2018 The ASSISTments Project and the Future of Crowd Sourcing (Neil Heffernan)
- 2015 Synthesizing Computer-based Scaffolding (Brian Belland)
- 2015 Teaching with an Intelligent Synthetic Peer Learner (Noboru Matsuda)
- 2018 Neural Impacts of Classroom-based Spatial Education (Nhi Dinh)
- 2019 Toward a System of Three-Dimensional Classroom Assessment (Erin Furtak)

This video has had approximately 332 visits by 268 visitors from 119 unique locations. It has been played 134 times as of 05/2023.

Map reflects activity with this presentation from the 2021 STEM For All Video Showcase website, as well as the STEM For All Multiplex website.

Based on periodically updated Google Analytics data. This is intended to show usage trends but may not capture all activity from every visitor.

ECR-EHR Core Research Videos Playlist

Videos made by projects funded by the NSF ECR-EHR Core Research program from the 2021 STEM for All Video Showcase.

- 2021 Sciencing from Home During the Pandemic (Saira Mortier)
- 2021 Staying in Science: STEM Pathways through Mentored Research (Rachel Chaffee)
- 2021 STEM Cascades: Youth as Teachers and Mentors (Eli Tucker-Raymond)
- 2021 Inclusion and Math Class (Nathanial Brown)
- 2021 Keeping the focus on dimensions of quality math discourse (Paola Sztajn)
- 2021 Helping States Plan to Teach AI in K-12 (Christina Gardner-McCune)
- 2021 INSITE: Understanding STEM Career Readiness of Incarcerated (Heather Griller Clark)
- 2021 Virtual PD for STEM Teachers—Lessons for a New Landscape (Sarah Michaels)
- 2021 Big Data from Small Groups (Asmalina Saleh)
- 2021 Building Community to Shape Emerging Technologies (Judi Fusco)
- 2021 Theory-based Computational Analysis of Classroom Video Data (Christina Krist)
- 2021 Racial Equity in the STEM Math Pathway in Community Colleges (Helen Burn)
- 2021 Student Reasoning Patterns in NGSS Assessments (Lei Liu)

Discussion from the 2021 STEM For All Video Showcase (13 posts)

Christina Krist

Assistant Professor

Thank you for your interest in our video! We'd love to hear your feedback and questions about our project. Here are some additional questions that we are interested in discussing:

Qualitative research:

Computational analysis:

Combined analysis:

Andres Colubri

Assistant Professor

Hi Christina, interesting research, thank you for sharing! I wonder how you plan to incorporate context about what's going on in the class at the moment the videos are taken. I'm asking this as a complete neophyte in this area :-) I'd imagine that the interpretation of body poses depends on the activity going on in the class, and even on more specific information that only the teacher knows about. So I'm wondering whether it would be possible to annotate the videos and inferred poses in your system. I'm also thinking that the automatic pose inference might make mistakes from time to time (especially in crowded environments such as a classroom), so the teacher would need to enter manual corrections. Thank you!

Nigel Bosch

Assistant Professor

Hi Andres, I can speak to the automatic pose inference part: it definitely makes the occasional mistake! However, the advantage of doing it automatically is that it can handle large volumes of fine-grained video data. So, on average, we anticipate that occasional errors will be offset by the volume of correct inferences in surrounding frames of the video, though that remains to be seen, and we are also considering ways for a teacher/researcher to get involved in the process and evaluate or correct these scenarios.

With respect to context, we do have a bit of additional data regarding the type of activities (e.g., group work, student presentations) going on in the classroom at each point. From the qualitative perspective, this info is certainly relevant to interpretation, though it is a bit tougher to consider in computational analyses. This is an area we plan to explore, however!

Andres Colubri

Assistant Professor

I see, the pose inference occurs on a per-frame basis, so then you get an average or consensus inference over time?

Paul Hur

Hi Andres -- I work with Nigel on doing automatic pose inference work on the project. We still need to explore how the overall poses change over time, but I think it will for sure be an important first step to see if our team's qualitative observations on classroom poses are corroborated by the fluctuations of pose inferences on the computational side!
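The per-frame "consensus over time" idea raised in this exchange can be illustrated with a sliding-window majority vote. This is a minimal sketch, not the project's method: it assumes some upstream step (not described in the discussion) has already turned each frame's OpenPose keypoints into a discrete posture label, the label names are hypothetical, and a frame where detection failed is marked `None`.

```python
from collections import Counter
from typing import List, Optional, Sequence


def smooth_labels(frames: Sequence[Optional[str]], half_window: int = 2) -> List[Optional[str]]:
    """Majority-vote smoothing of per-frame posture labels.

    Each frame takes the most common label among the detected frames within
    +/- half_window of it; None marks a frame where detection failed.
    """
    smoothed: List[Optional[str]] = []
    for i in range(len(frames)):
        lo = max(0, i - half_window)
        hi = min(len(frames), i + half_window + 1)
        window = [label for label in frames[lo:hi] if label is not None]
        if not window:
            # No detections anywhere in the window: leave the frame unlabeled.
            smoothed.append(None)
        else:
            # Ties go to the label seen first in the window (Counter ordering).
            smoothed.append(Counter(window).most_common(1)[0][0])
    return smoothed


# Hypothetical labels: a one-frame dropout (None) and a one-frame "upright" blip.
frames = ["lean_in", "lean_in", None, "upright", "lean_in", "lean_in", "lean_in"]
print(smooth_labels(frames))  # every frame becomes "lean_in"
```

With `half_window=2`, both the one-frame dropout and the one-frame outlier are outvoted by their neighbors; a wider window smooths more aggressively but could also erase genuinely brief movements.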

Michael Chang

Postdoctoral Research

This seems like a very useful tool! I’ve always wondered if/how researchers should use AI in qualitative research. How do you imagine qualitative researchers interacting with this tool? What sorts of analytics do you think are useful to provide to the qualitative researchers? And finally, what happens when a qualitative researcher identifies a new positioning movement that was previously uncoded? Do you think this tool will indirectly constrain what types of behaviors qualitative researchers look for in their video analysis?

Nigel Bosch

Assistant Professor

Great questions! We have some future plans to explore exactly how qualitative researchers can take advantage of the tool; within the scope of the current grant project, the tool is still too early a prototype for that. But eventually, we plan to explore the tool as a sort of filtering mechanism to help qualitative researchers find interesting and relevant parts of large video datasets; this might consist of visualizations of behaviors over time and of key moments that could fit a particular behavior. This may indeed somewhat constrain the types of behaviors qualitative researchers examine, but at the same time it might expand them, if researchers were not already looking for these types. For new qualitative researchers, such as research students, it might be especially effective for pushing them in new (to them) directions.
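The filtering idea described in this answer could take many forms. One simple, hypothetical version flags runs of time during which a per-second behavior score stays above a threshold, giving a researcher a short list of moments to review. The score (for example, the fraction of frames in a second where some behavior was detected), the threshold, and the minimum run length are all illustrative assumptions, not parameters from the project.

```python
from typing import List, Optional, Sequence, Tuple


def candidate_segments(scores: Sequence[float], threshold: float = 0.5,
                       min_length: int = 3) -> List[Tuple[int, int]]:
    """Return (start, end) pairs, end exclusive, for runs where
    scores[i] >= threshold lasts at least min_length consecutive steps."""
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, score in enumerate(scores):
        if score >= threshold and start is None:
            start = i  # a run begins
        elif score < threshold and start is not None:
            if i - start >= min_length:
                segments.append((start, i))
            start = None  # the run ends
    # Close a run that is still open at the end of the recording.
    if start is not None and len(scores) - start >= min_length:
        segments.append((start, len(scores)))
    return segments


# Hypothetical per-second scores for one behavior.
scores = [0.1, 0.6, 0.7, 0.8, 0.2, 0.9, 0.95, 0.4, 0.6, 0.7, 0.9, 0.8]
print(candidate_segments(scores))  # [(1, 4), (8, 12)]
```

The two-second run at seconds 5 and 6 is dropped by `min_length`, which shows in miniature how such a filter's settings could narrow what a researcher ends up looking at, the concern raised in the question.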

Paola Sztajn

This is so interesting! In our work on discourse, it is definitely the case that when teachers remove themselves from the center of the classroom, for example, it encourages student discussion. I look forward to learning about what you are learning.

Paul Hur

Yes, absolutely. We are also looking at how the encouragement of student discussion in the absence of immediate teacher presence (as you mentioned) relates to shifts in students' physical movement. Maybe the students become more relaxed, and more expressive with hand gestures or head movements? Would love to hear your thoughts based on your team's observations from analyzing math classroom discourse!

Jeremy Roschelle

Executive Director, Learning Sciences

Hi all, congrats on all you've accomplished -- this is difficult stuff. Last week I watched Coded Bias on Netflix, and so now I can't help but wonder what issues of bias in the visual algorithms you may have found, and how you are protecting against bias.

Also, are you aware of Jacob Whitehill's work? He has done some nice stuff with body posture as captured in a corpus of preschooler videos. See, for example, this paper.

Keep up the good work or one could say... um.... lean in!

Joshua Rosenberg

Paul Hur

That is a great and important question regarding bias in the visual algorithms, but one that I'm not sure I can sufficiently answer (perhaps someone more knowledgeable could jump in, if available!). I will say, though, that in our application of OpenPose's pose detection methods, we can see some limitations of the algorithms. Characteristics of the low camera angles in the classroom videos, such as the occlusion of certain students in the back of the class and the density/close proximity of individuals' bodies, lead to inconsistent pose detections in the OpenPose output. It is difficult to say confidently whether this is due to bias, or even to tell in which situations (certain contrast, brightness, etc.) the inconsistencies tend to manifest, since there are instances when pose detection fails (for a few frames) even when it has been working fine up to that point. While it is difficult to account for these situations and protect individuals against them, we hope to eventually explore more privacy options for individuals who do not want to be included in the detection output.

Thank you for linking that paper -- I have not seen it before! We are aware of Jacob Whitehill's other work and are inspired by it and other work in the area of developing automatic methods for analyzing classroom data.
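Brief detection failures like the few-frame dropouts described above are sometimes bridged by interpolating a keypoint's coordinates across short gaps. This sketch is an illustration under stated assumptions, not something the team reports doing: it treats one coordinate of one keypoint as a list with `None` for missed frames, fills runs of up to a hypothetical `max_gap` frames linearly, and leaves longer gaps and gaps at either end untouched so that extended occlusions are not papered over.

```python
from typing import List, Optional, Sequence


def fill_short_gaps(xs: Sequence[Optional[float]], max_gap: int = 3) -> List[Optional[float]]:
    """Linearly interpolate runs of None of length <= max_gap that have a
    detected value on both sides; longer gaps and edge gaps stay None."""
    out: List[Optional[float]] = list(xs)
    n = len(out)
    i = 0
    while i < n:
        if out[i] is not None:
            i += 1
            continue
        j = i
        while j < n and out[j] is None:
            j += 1
        gap = j - i  # out[i:j] is a run of missing frames
        if i > 0 and j < n and gap <= max_gap:
            left, right = out[i - 1], out[j]
            for k in range(gap):
                # Evenly spaced points strictly between left and right.
                out[i + k] = left + (right - left) * (k + 1) / (gap + 1)
        i = j
    return out


# Hypothetical x-coordinate of one keypoint (e.g., a wrist) across nine frames.
xs = [1.0, None, None, 4.0, None, None, None, None, 9.0]
print(fill_short_gaps(xs))  # [1.0, 2.0, 3.0, 4.0, None, None, None, None, 9.0]
```

Leaving the four-frame gap empty is a deliberate choice: a long dropout may reflect real occlusion, and inventing positions there could mislead later analysis.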

Jeremy Roschelle

Daniel Heck

Really appreciate the project team sharing this great work. I am especially curious about how you've structured work on the same data between computational video analytics and human researcher methods of looking at video. When and why do you pass data, in both directions, between computer algorithmic analysis and human researcher/observers? What has worked well in this process? And what has been surprising or challenging?

Joshua Rosenberg

Assistant Professor, STEM Education

Hi Daniel, thanks for viewing our video and for asking about this - we're at a point in the project at which we'll soon be working to integrate output from the two methods (computational and human researcher-driven), but we haven't done this just yet. We do have some ideas about how we'll be doing this, some high-level and more at the conceptual stage for now, and some quite concrete/technical.

At a high level, we're curious about whether the computational output can serve as a starting point for qualitative analysts, such as by identifying segments of the data in which certain kinds of activities of interest might be more likely to occur. A qualitative analyst could then investigate those segments in depth. Christina and project colleagues presented a paper at the most recent AERA conference on this and on some other ways we can integrate the data.

At a concrete/technical level, we've discussed how selecting the time scale at which we'll join data (e.g., based on second or millisecond units) requires us to make certain decisions about what a meaningful unit of data is. But we're very much working out these details now. I hope we can share more next year on what's working (and what's not) after we've explored this in greater depth.

thanks again for this!

Josh
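The time-scale decision mentioned in this reply can be made concrete with a small sketch that bins per-frame computational output into whole seconds and then attaches human-coded intervals to each second. Everything here is hypothetical: the frame rate, the per-frame values, the code label, and the rule of matching a code by where each second starts. Those last two choices are exactly the kind of "meaningful unit" decisions the reply describes.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple


def per_second_means(frame_values: Sequence[float], fps: int) -> Dict[int, float]:
    """Average per-frame values within each whole second of video."""
    sums: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for frame_index, value in enumerate(frame_values):
        second = frame_index // fps  # frame -> whole second it falls in
        sums[second] += value
        counts[second] += 1
    return {second: sums[second] / counts[second] for second in sums}


def join_with_codes(means: Dict[int, float],
                    codes: Sequence[Tuple[float, float, str]]
                    ) -> List[Tuple[int, float, Optional[str]]]:
    """Pair each second with the first human code whose [start, end)
    interval (in seconds) contains the start of that second, else None."""
    rows: List[Tuple[int, float, Optional[str]]] = []
    for second in sorted(means):
        code = next((label for start, end, label in codes if start <= second < end), None)
        rows.append((second, means[second], code))
    return rows


# Toy example: 2 frames per second, one coded interval covering seconds 0-2.
means = per_second_means([0.0, 1.0, 1.0, 1.0, 0.5, 0.5], fps=2)
print(join_with_codes(means, [(0.0, 2.0, "joint_exploration")]))
# [(0, 0.5, 'joint_exploration'), (1, 1.0, 'joint_exploration'), (2, 0.5, None)]
```

Joining at a finer unit would likely keep more of the frame-level detail, at the cost of each joined row being harder to interpret on its own.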

Further posting is closed as the event has ended.