- Jessica Mislevy, Senior Researcher, SRI International (https://www.sri.com/about/people/jessica-mislevy)
- Claire Christensen, Education Researcher, SRI International (https://www.sri.com/about/people/claire-christensen)
- Shari Gardner, Education Researcher, SRI International
- Sarah Gerard, Education Researcher, SRI International (https://www.sri.com/about/people/sarah-gerard)

Developing a K-12 STEM Education Indicator System

2017

Grades K-6, Grades 6-8, Grades 9-12

STEM education has a major impact on economic prosperity. In 2012, Congress asked NSF to identify methods for tracking progress toward improvement in K-12 STEM education. In response, the National Research Council proposed a set of 14 progress indicators related to students’ access to quality learning, educators’ capacity, and policy and funding initiatives in STEM. The 14 indicators address three distinct levels of the education system: effective STEM instructional practices in the classroom; school conditions that support STEM learning; and policies that support effective schools and STEM instruction.

The K-12 STEM Education indicator system is designed to drive improvement, not to serve as a formal accountability system. The system can illuminate areas where education practices could be modified to improve the quality of K-12 STEM education, as well as to address issues of equity and access. While researchers have long measured educational system outputs, like student test scores and career choices, the U.S. has done little to measure the components in the “black box” of the education system that influence student achievement and career interests.

SRI International has supported efforts by NSF to further study and develop this set of indicators that can be used by policymakers, researchers, and practitioners to monitor components of K-12 STEM education and to guide improvements. This system of indicators has impacted research and theory about K-12 STEM education, providing a generative framework for other researchers and supporting the development of evidence that advances the field and supplies policymakers with information to guide policy decisions at the federal, state, and local levels.

Related Content for Measuring New Indicators for K-12 STEM Education

-

2016Underrepresented Minority Student Perspectives of Challenges

2016Underrepresented Minority Student Perspectives of Challenges

Shabnam Etemadi

-

2019Learning by Making for STEM Success

2019Learning by Making for STEM Success

Lynn Cominsky

-

2018KinderTEK: Mastering Math on an iPad Expedition

2018KinderTEK: Mastering Math on an iPad Expedition

Mari Strand Cary

-

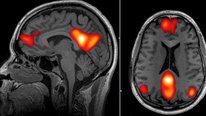

2016Cognitive and Neural Correlates of Spatial Thinking

2016Cognitive and Neural Correlates of Spatial Thinking

Emily Grossnickle

-

2017Cognitive and Neural Benefits of Teaching Spatial Thinking

2017Cognitive and Neural Benefits of Teaching Spatial Thinking

Emily Peterson

-

2021Preparing STEM Teachers to Support ELs During COVID

2021Preparing STEM Teachers to Support ELs During COVID

Cathy Lussier

-

2017Developing Adanced STEM High Schools in Egypt

2017Developing Adanced STEM High Schools in Egypt

F. Merlino

-

2017SciGirls Profiles: Real Women, Real Jobs

2017SciGirls Profiles: Real Women, Real Jobs

Rita Karl

Sarah Gerard

Education Researcher

Thank you for taking the time to watch our video! The research team would love your feedback, especially on the following two questions:

How do you envision your organization using data or data collection tools from the indicator system?

How could the indicator system be useful in supporting policy discussions and educational decisions?

William McHenry

Executive Director

This video presented a system of K-12 education indicators to help educators improve student learning in a formative manner. It discusses how to promote the use of common data to drive student learning effectively. This high-quality video challenges educators to get on the same page. I would like to see multiple states with diverse populations implement or assess these indicators. Do you think this can happen on a regional or national scale?

Jessica Mislevy

Senior Researcher

Thanks for the comment, William! We’d love to see states adopt these or similar indicators as well. One leading example is Minnesota: the state independently developed a similar system of indicators for STEM education, and we understand that the effort has so far been very successful in monitoring critical data in the state and informing state policy decisions. More info here: http://www.mncompass.org/education/stem/overview. Other states have worked with researchers studying the STEM Indicators to collect data and make it useful at the state level. For example, Rolf Blank at NORC developed an online reporting system for capturing data on the STEM Indicators related to state assessment policies in science and math. Twenty states participated last year, and that number continues to grow. More info here: stem-assessment.org.

Jake Foster

As a policy person who has worked in state government, I can certainly see the application and potential of these indicators, and I appreciate the systemic focus you have brought to this project. I am, however, a little unclear about the role or goal of the different components of your particular study. Are collaborators in this project working to validate or build an evidence base for a select set of these indicators? Or is the project taking as given that these indicators are strong, with collaborators working to embed them in policy contexts?

Shari Gardner

Education Researcher

Thank you, Jake, for your comment and question! The answer is: both. The NRC committee intended the development of the indicator system to be ongoing. The charge was to build the system incrementally rather than wait for all data collection measures to be available. When the indicators were proposed, the NRC committee recognized that they were at different stages of development. Some indicators could be measured, at least in part, with existing data. Others could be measured with modest edits or additions to existing surveys, such as those conducted by the National Center for Education Statistics. We outline these sources in SRI's roadmap, available at http://stemindicators.org/roadmap/. Still other indicators required further conceptual work and some preliminary research before a data collection plan to measure them validly and reliably at scale could be solidified. Recognizing this, NSF issued a Dear Colleague Letter to fund a series of EAGER grants to advance knowledge about how to measure the indicators. Three of these projects are featured in our video, and more information about these and the other projects can be found here: http://stemindicators.org/stem-education-resear....

Heidi Schweingruber

Director

It's probably not surprising that I'm very excited about this video. It is wonderful to see how the portfolio of work based on the National Academies report is evolving. I'd love to hear about which group of indicators has been the most challenging to develop. Were there any unanticipated hurdles? Is the development effort at a point yet where you are able to collect data and begin to see trends?

Sarah Gerard

Education Researcher

Glad to hear it, Heidi! As you know from Monitoring Progress (https://www.nap.edu/catalog/13509/monitoring-progress-toward-successful-k-12-stem-education-a-nation?utm_expid=4418042-5.krRTDpXJQISoXLpdo-1Ynw.0), some of the 14 indicators required more development than others. A number of the DCL researchers funded by NSF (http://stemindicators.org/stem-education-researchers/dclprojects/) focused on Indicators 4, 5, and 6 (Adoption of instructional materials in grades K–12 that embody rigorous, research-based standards; Classroom coverage of content and practices in rigorous, research-based standards; Teachers’ science and mathematics content knowledge for teaching). These indicators in particular required a good deal of work to figure out how they could be measured, and we as a field are still wrestling with how to capture data on them feasibly and cost-effectively at scale. We’re pleased that some of these projects have already yielded some data. More details on data collection development can be found on our Roadmap: http://stemindicators.org/roadmap/.

Shuchi Grover

Great work, Jessica, Sarah, and team!

As a researcher working in computer science, I'm very interested in learning how we can bring computer science into this STEM indicators work. I realize that it's still early days, with states and districts just starting to roll out CS at the various school levels, but I also wonder whether having some "early" indicators might help shape and inform how districts and states actually implement CS. Would love to hear your thoughts on this!

Jessica Mislevy

Senior Researcher

Thanks, Shuchi, for raising this question. At the time the indicator system was conceptualized, the NRC committee decided to focus on the science and math parts of STEM because the bulk of the research and data concerning STEM education at the K-12 level relates to mathematics and science education; research in technology and engineering education was not as mature because the teaching of those subjects was less prevalent in K-12 (read more about their process here: https://www.nap.edu/catalog/13158/successful-k-12-stem-education-identifying-effective-approaches-in-science). Recognizing this, however, the committee did not envision the indicators as static. They were designed to measure aspects of STEM teaching and learning that, to the best of our current knowledge, can enhance students' interest and competencies in STEM. It is likely that needs will emerge for updated or additional indicators as the empirical research base continues to grow in areas like computer science, technology, and engineering. We welcome ideas from researchers like you working in this area about what those potential “early” indicators might be!

Kathy Kennedy

I am excited to learn more about the STEM indicators, and I am wondering whether any groups are using them to inform PD for teachers at the pre-service or in-service levels?

Sarah Gerard

Education Researcher

Hi Kathy – great question! We think that this would be an excellent use of some of the indicators, particularly Indicator 6 (Teachers’ science and mathematics content knowledge for teaching) and Indicator 7 (Teacher participation in STEM-specific PD). You may be interested in the concept paper that Suzanne Wilson of the University of Connecticut wrote last year exploring some of the policy implications of the indicator system, Measuring the Quantity & Quality of the K-12 STEM Teacher Pipeline (http://stemindicators.org/concept-paper-2/).
